Ever stared at your computer and wondered how it juggles a dozen apps without breaking a sweat? Welcome to the fascinating world of operating system concepts. Today, we’ll break down four terms that confuse even seasoned developers: multiprogramming, multitasking, multithreading, and multiprocessing.

- The Basics of CPU Management

- What is Multiprogramming?

- The Core Concept

- How Multiprogramming Works

- Key Characteristics of Multiprogramming

- What is Multitasking?

- The Evolution from Multiprogramming

- Types of Multitasking

- How Multitasking Differs from Multiprogramming

- What is Multithreading?

- Understanding Threads

- Benefits of Multithreading

- Multithreading Challenges

- Real-World Multithreading Examples

- What is Multiprocessing?

- Types of Multiprocessing Systems

- How Multiprocessing Works

- Advantages of Multiprocessing

- Multiprogramming vs Multitasking vs Multithreading vs Multiprocessing: The Ultimate Comparison

- Comparison Table

- Key Differences Explained

- When Should You Use Each Technique?

- Choose Multiprogramming When

- Choose Multitasking When

- Choose Multithreading When

- Choose Multiprocessing When

- Real-World Applications and Examples

- Web Servers

- Databases

- Video Rendering

- Operating Systems

- Common Misconceptions Cleared

- Myth 1: Multitasking Means True Parallel Execution

- Myth 2: More Threads Always Mean Better Performance

- Myth 3: Multiprocessing and Multithreading Are the Same

- Myth 4: Multiprogramming Is Obsolete

- The Future of Parallel Computing

- Heterogeneous Computing

- Cloud Computing

- Quantum Computing

- Frequently Asked Questions

- What is the main difference between multitasking and multiprogramming?

- Can multithreading work on single-core processors?

- Why is multiprocessing sometimes better than multithreading?

- Do all applications benefit from multithreading?

- How do modern CPUs support these concepts?

- Conclusion

Don’t worry. By the end of this article, you’ll understand these concepts better than your computer science professor. Let’s dive in!

The Basics of CPU Management

Before we compare these concepts, let’s establish some ground rules. Your CPU (Central Processing Unit) is essentially the brain of your computer. It executes instructions, processes data, and manages resources.

Here’s the catch: a CPU core executes only one stream of instructions at a time (hardware features like simultaneous multithreading aside). So how does your computer run Spotify, Chrome, and that spreadsheet simultaneously? That’s where these four techniques come into play.

According to the classic textbook “Operating System Concepts” by Silberschatz, Galvin, and Gagne, modern operating systems use sophisticated scheduling algorithms to create the illusion of simultaneous execution.

What is Multiprogramming?

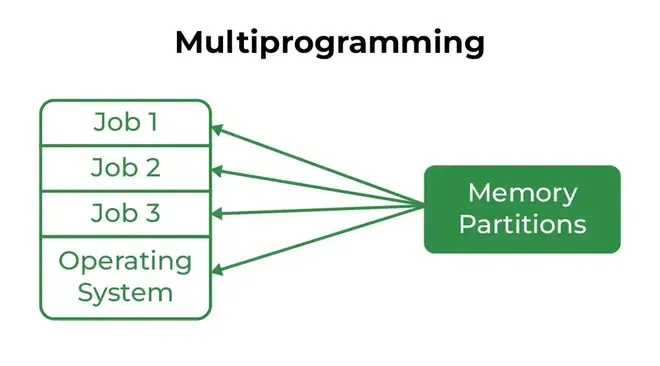

Multiprogramming represents one of the earliest techniques for maximizing CPU utilization. It emerged in the 1960s when computers cost millions of dollars, and wasting CPU cycles felt like burning money.

The Core Concept

In multiprogramming, the operating system loads multiple programs into memory simultaneously. When one program waits for I/O operations (like reading from disk), the CPU switches to another program instead of sitting idle.

Think of it like a chef cooking multiple dishes. While waiting for the pasta to boil, the chef chops vegetables. The chef doesn’t stand there watching water bubble.

How Multiprogramming Works

The process follows these steps:

- The OS loads several programs into main memory

- One program executes until it needs I/O

- The CPU immediately switches to another ready program

- This cycle continues until all programs complete
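To make the payoff concrete, here is a toy Python simulation of those steps. It is a sketch under simplifying assumptions, not how any real OS works: time is counted in abstract ticks, each hypothetical job alternates CPU bursts and I/O waits, I/O runs on its own device alongside the CPU, and the CPU switches jobs only when the running one starts I/O or finishes. Passing multiprogramming=False keeps a single job in memory at a time for comparison.

```python
from collections import deque


def simulate(jobs, multiprogramming=True):
    """Toy model: return CPU utilization (busy ticks / total ticks).

    Each job is a list of burst lengths that alternate CPU and I/O,
    starting and ending with CPU: [cpu, io, cpu, ...]. I/O is assumed to
    proceed on its own device while the CPU runs something else.
    """
    # Convert each job into a queue of ("cpu" | "io", ticks_left) bursts.
    queues = [
        deque(("cpu" if i % 2 == 0 else "io", n) for i, n in enumerate(job))
        for job in jobs
    ]
    capacity = len(jobs) if multiprogramming else 1  # jobs allowed in memory
    not_loaded = deque(range(len(jobs)))
    in_memory = []
    running = None
    busy = ticks = 0

    while not_loaded or in_memory:
        # Load waiting jobs while memory has room.
        while not_loaded and len(in_memory) < capacity:
            in_memory.append(not_loaded.popleft())
        # If the CPU is free, switch to any in-memory job ready to compute.
        if running is None:
            running = next((j for j in in_memory if queues[j][0][0] == "cpu"), None)

        ticks += 1
        for j in list(in_memory):
            kind, left = queues[j][0]
            if kind == "cpu" and j != running:
                continue  # ready, but waiting for the CPU
            if kind == "cpu":
                busy += 1  # the running job uses the CPU this tick
            left -= 1      # the CPU burst or I/O wait advances by one tick
            if left > 0:
                queues[j][0] = (kind, left)
                continue
            queues[j].popleft()  # burst finished
            if j == running:
                running = None   # job starts I/O (or exits): the CPU is free
            if not queues[j]:
                in_memory.remove(j)  # job complete: free its memory

    return busy / ticks


jobs = [[2, 3, 2], [2, 3, 2]]  # hypothetical: 2 ticks CPU, 3 ticks I/O, 2 ticks CPU
print(round(simulate(jobs, multiprogramming=False), 2))  # 0.57
print(round(simulate(jobs), 2))                          # 0.89
```

With two identical jobs, keeping both in memory lets the CPU compute for one while the other waits on I/O, which in this toy model lifts utilization from about 57% to about 89%.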

Key Characteristics of Multiprogramming

- Primary Goal: Maximize CPU utilization

- Switching Trigger: I/O operations or program completion

- User Interaction: Minimal (batch processing oriented)

- Memory Requirement: Multiple programs reside in memory

According to IBM’s documentation on mainframe computing, early multiprogramming systems achieved CPU utilization rates above 90%, compared to 20-30% in single-program environments.

What is Multitasking?

Multitasking evolved from multiprogramming to address user interactivity. If multiprogramming is the serious older sibling, multitasking is the people-pleaser who wants everyone happy.

The Evolution from Multiprogramming

While multiprogramming focuses on CPU efficiency, multitasking prioritizes user experience. It creates the illusion that multiple applications run simultaneously, even on a single-core processor.

Types of Multitasking

Preemptive Multitasking

The operating system can interrupt a running process at any moment, typically when a hardware timer fires, and hand the CPU to another process. Modern systems like Windows, macOS, and Linux use preemptive multitasking.

The OS allocates time slices (typically 10-100 milliseconds) to each process. When a slice expires, the OS suspends the current process and switches to another. This happens so fast that humans perceive simultaneous execution.
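Time slicing can be modeled as round-robin scheduling, the textbook preemptive policy. The sketch below is deliberately simplified (every job is ready at time 0, there is no I/O and no priority, and context switches cost nothing), so treat it as an illustration rather than how Windows, macOS, or Linux actually schedule. The job names and the quantum are made up.

```python
from collections import deque


def round_robin(bursts, quantum):
    """Simulate round-robin time slicing.

    `bursts` maps job name -> CPU ticks needed; all jobs are ready at time 0.
    Returns (finish_times, timeline), where timeline lists (job, ticks_run).
    Context switches are treated as free, which real systems can't assume.
    """
    ready = deque(bursts.items())
    clock = 0
    finish, timeline = {}, []
    while ready:
        name, need = ready.popleft()
        run = min(quantum, need)  # run until done or the slice expires
        clock += run
        timeline.append((name, run))
        if need > run:
            ready.append((name, need - run))  # preempted: back of the queue
        else:
            finish[name] = clock
    return finish, timeline


finish, timeline = round_robin({"A": 5, "B": 2, "C": 4}, quantum=2)
print(timeline)  # [('A', 2), ('B', 2), ('C', 2), ('A', 2), ('C', 2), ('A', 1)]
print(finish)    # {'B': 4, 'C': 10, 'A': 11}
```

Job B needs only 2 ticks, so it finishes at tick 4 instead of waiting for A to finish. Short slices keep every job making visible progress, which is what makes an interactive system feel responsive.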

Cooperative Multitasking

Processes voluntarily yield control to other processes. Windows 3.1 and the classic Mac OS (through Mac OS 9) used this approach.

The problem? One misbehaving application that never yielded could freeze the entire system. Preemptive multitasking fixed this: today, a hung application (the familiar spinning wheel) usually locks up only its own windows while the rest of the system keeps running.
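Python generators make a handy model of cooperative multitasking, since a generator runs until it chooses to yield. In this sketch (the worker and cooperative_run names are illustrative), the scheduler can only regain control when a task yields.

```python
from collections import deque


def worker(name, steps):
    """A cooperative task: does one step of work, then yields control."""
    for i in range(steps):
        yield f"{name}{i}"  # voluntary yield point


def cooperative_run(tasks):
    """Round-robin over generator tasks; control returns only when a task yields."""
    ready = deque(tasks)
    log = []
    while ready:
        task = ready.popleft()
        try:
            log.append(next(task))  # runs the task until its next `yield`
        except StopIteration:
            continue                # task finished; drop it
        ready.append(task)          # it yielded politely: requeue it
    return log


print(cooperative_run([worker("A", 3), worker("B", 2)]))
# ['A0', 'B0', 'A1', 'B1', 'A2']
```

The weakness is built in: cooperative_run has no way to interrupt a task. If a worker looped forever without reaching yield, next(task) would never return and every other task would starve, which is exactly the single-application freeze cooperative systems suffered from.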

How Multitasking Differs from Multiprogramming

| Aspect | Multiprogramming | Multitasking |
|---|---|---|
| Primary Focus | CPU utilization | User responsiveness |
| Switching Mechanism | I/O wait | Time slicing |
| User Interaction | Minimal | High |
| Era of Origin | 1960s | 1960s time-sharing systems; mainstream on PCs in the 1980s-1990s |

Microsoft’s Windows documentation explains that modern Windows versions use a priority-based preemptive scheduler that balances responsiveness with throughput.

What is Multithreading?

Now we’re getting into the good stuff. Multithreading brings concurrency inside individual applications. Instead of running multiple programs, we run multiple execution paths within a single program.

Understanding Threads

A thread represents the smallest unit of execution within a process. Every process has at least one thread (the main thread). Developers can create additional threads to run work concurrently, and in parallel when multiple cores are available.

Imagine a restaurant. The restaurant (process) has multiple waiters (threads). Each waiter handles different tables simultaneously. They share the same kitchen (memory) and resources.
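In code, the shared kitchen is simply the process’s memory. In this Python sketch, three threads (the waiter names are hypothetical) write into one dictionary that all of them can see. The lock is there because shared data generally needs synchronization.

```python
import threading


def run_waiters(tables_per_waiter):
    """Each thread ("waiter") records the tables it served in one shared dict."""
    served = {}               # a single object, visible to every thread
    lock = threading.Lock()   # guards the shared dict

    def waiter(name, tables):
        for table in tables:
            with lock:
                served[table] = name

    threads = [
        threading.Thread(target=waiter, args=(name, tables))
        for name, tables in tables_per_waiter.items()
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()              # wait until every waiter is done
    return served


served = run_waiters({"ana": [1, 2], "ben": [3], "cai": [4, 5]})
print(sorted(served.items()))
# [(1, 'ana'), (2, 'ana'), (3, 'ben'), (4, 'cai'), (5, 'cai')]
```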

Benefits of Multithreading

Improved Performance: On multi-core processors, applications can complete tasks faster by dividing work among threads that run in parallel.

Better Resource Utilization: Threads share memory space, reducing overhead compared to multiple processes.

Enhanced Responsiveness: One thread can handle user input while another performs background calculations.

Simplified Communication: Threads within the same process share data easily without complex inter-process communication.
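The performance and responsiveness benefits are easiest to observe with I/O-bound work. This sketch assumes time.sleep as a stand-in for a blocking call such as a disk read or network request (fake_io is a hypothetical name). CPython releases its Global Interpreter Lock while a thread sleeps or waits on I/O, so the waits overlap even though only one thread runs Python code at a time.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io(delay):
    """Hypothetical stand-in for a blocking call (disk read, HTTP request)."""
    time.sleep(delay)  # the thread blocks; it needs no CPU while waiting
    return delay


def run_serial(delays):
    return [fake_io(d) for d in delays]


def run_threaded(delays):
    with ThreadPoolExecutor(max_workers=len(delays)) as pool:
        return list(pool.map(fake_io, delays))


def timed(fn, *args):
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


delays = [0.2] * 4
serial = timed(run_serial, delays)      # waits back to back: about 0.8 s
threaded = timed(run_threaded, delays)  # waits overlap: about 0.2 s
print(f"serial {serial:.2f}s, threaded {threaded:.2f}s")
```

Exact timings vary by machine, but the threaded run should take roughly a quarter of the serial time. CPU-bound work does not overlap this way in a standard CPython build.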

Multithreading Challenges

Multithreading isn’t free, though. Developers face significant challenges:

Race Conditions

When two threads access shared data at the same time and at least one of them modifies it, the outcome depends on unpredictable timing. The Java documentation warns developers to synchronize access to shared data to prevent these race conditions.
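The usual fix is mutual exclusion: only one thread at a time may run the code that touches the shared data. Here is a Python sketch with an illustrative Counter class, using threading.Lock from the standard library:

```python
import threading


class Counter:
    """Illustrative shared counter protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # `self.value += 1` is a read-modify-write sequence. The lock makes
        # it atomic with respect to other threads calling increment().
        with self._lock:
            self.value += 1


def hammer(counter, n_threads=4, n_increments=100_000):
    """Have several threads increment the same counter concurrently."""
    def work():
        for _ in range(n_increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


print(hammer(Counter()))  # 400000
```

Without the with self._lock: line, the total can come up short, because two threads may read the same old value and one increment is lost. Whether that happens on a given run depends on timing and the interpreter version, which is exactly what makes race conditions hard to catch in testing.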

Deadlocks

Two or more threads wait indefinitely for each other to release resources. Picture two people in a narrow hallway, each waiting for the other to move first. Neither moves. Both stay stuck.
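In code, the hallway standoff usually looks like two threads taking the same two locks in opposite orders. One common prevention technique is to make every thread acquire locks in a single global order. The Account class and transfer function below are hypothetical, written only to illustrate the pattern.

```python
import threading


class Account:
    """Hypothetical bank account guarded by its own lock."""

    def __init__(self, account_id, balance):
        self.id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    if src is dst:
        return  # nothing to move (and avoids locking one lock twice)
    # Naive code would lock src, then dst. If one thread runs transfer(a, b)
    # while another runs transfer(b, a), each can hold its first lock and wait
    # forever for the second. Taking locks in one global order (by id) rules
    # out that circular wait.
    first, second = sorted((src, dst), key=lambda acct: acct.id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount


def repeat(src, dst, times):
    for _ in range(times):
        transfer(src, dst, 1)


a, b = Account(1, 1000), Account(2, 1000)
threads = [
    threading.Thread(target=repeat, args=(a, b, 10_000)),
    threading.Thread(target=repeat, args=(b, a, 10_000)),  # opposite direction
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(a.balance, b.balance)  # 1000 1000
```

Both threads finish because neither can hold account 2’s lock while waiting for account 1’s. Lock ordering is only one strategy; acquiring with a timeout (threading.Lock.acquire accepts a timeout argument) and using fewer, coarser locks are others.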

Complexity

Multithreaded code is harder to write, debug, and maintain. Even experienced developers make threading mistakes.

Real-World Multithreading Examples

- Web Browsers: Separate threads handle rendering, JavaScript execution, and network requests

- Video Games: Different threads manage graphics, physics, and AI

- Word Processors: One thread handles typing while another auto-saves in the background

Oracle’s Java Concurrency tutorial covers the tools for doing this safely. How much faster a well-written multithreaded program runs depends on how many cores are available and how much of its work can actually proceed in parallel.

What is Multiprocessing?

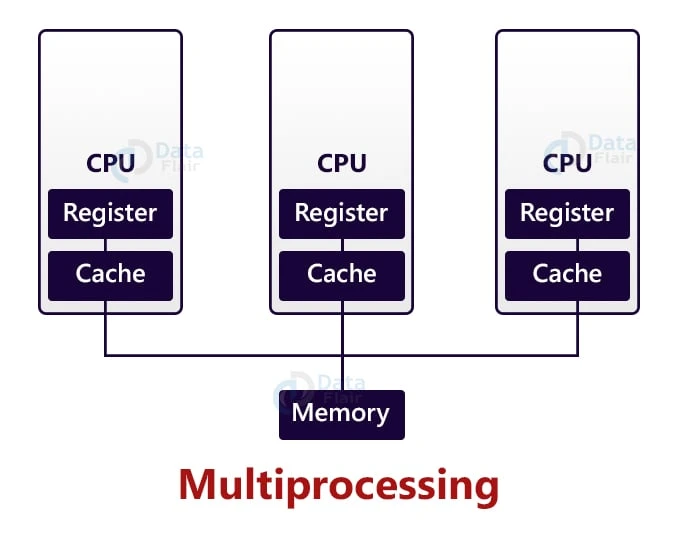

Multiprocessing represents the hardware solution to parallel execution. While multithreading operates at the software level, multiprocessing involves multiple physical or logical CPUs working together.

Types of Multiprocessing Systems

Symmetric Multiprocessing (SMP)

All processors share the same memory and have equal access to I/O devices. Modern desktop computers and servers typically use SMP architecture.

Any processor can run any process or thread, and the operating system distributes the workload across all available processors.

Asymmetric Multiprocessing (AMP)

One master processor controls the system and assigns tasks to subordinate processors. This approach was common in older systems and still shows up in some embedded designs.

How Multiprocessing Works

In a multiprocessing system:

- The machine has multiple CPUs or cores

- The OS scheduler assigns processes/threads to different processors

- True parallel execution occurs (not just the illusion)

- Work that can be split up finishes faster because its pieces truly run at the same time

Advantages of Multiprocessing

True Parallelism: Unlike multitasking’s illusion, multiprocessing offers genuine simultaneous execution.

Increased Throughput: More processors mean more work gets done per second.

Fault Tolerance: In systems built for it, if one processor fails, the others can keep operating.

Scalability: Adding processors increases system capacity.

Intel’s developer documentation highlights that modern processors like the Core i9 series offer up to 24 cores, enabling massive parallel processing capabilities.

Multiprogramming vs Multitasking vs Multithreading vs Multiprocessing: The Ultimate Comparison

Let’s put everything together in a comprehensive comparison.

Comparison Table

| Feature | Multiprogramming | Multitasking | Multithreading | Multiprocessing |
|---|---|---|---|---|
| Level | Operating System | Operating System | Application | Hardware |
| Primary Goal | CPU Utilization | User Experience | Application Performance | System Performance |
| Execution Unit | Process | Process | Thread | Process/Thread |
| Memory Sharing | Separate | Separate | Shared | Separate/Shared |
| True Parallelism | No | No | Possible | Yes |
| Complexity | Low | Medium | High | High |

Key Differences Explained

Scope of Operation

Multiprogramming and multitasking operate at the operating system level. They manage how processes share system resources.

Multithreading operates at the application level. Developers implement threading within their programs.

Multiprocessing operates at the hardware level. It requires multiple physical or logical processors.

Resource Sharing

In multiprogramming and multitasking, each process has separate memory space. Processes communicate through inter-process communication (IPC) mechanisms.

Threads in multithreading share memory space within their parent process. This makes communication faster but requires careful synchronization.

Multiprocessing can work with both shared and distributed memory architectures.
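A small Python experiment shows the difference. A thread and a child process each increment the same module-level counter. Only the thread’s change shows up in the parent; the child works on its own copy, and anything it wants to share has to travel through an IPC channel, here a multiprocessing.Queue. The `if __name__ == "__main__":` guard matters on platforms that start child processes by re-importing the main module.

```python
import multiprocessing as mp
import threading

counter = 0  # module-level global


def bump(channel=None):
    """Increment this process's `counter`; optionally report it over IPC."""
    global counter
    counter += 1
    if channel is not None:
        channel.put(counter)  # a child's value only reaches the parent this way


def demo():
    global counter
    counter = 0

    t = threading.Thread(target=bump)
    t.start()
    t.join()
    after_thread = counter  # 1: the thread changed the parent's memory

    channel = mp.Queue()
    p = mp.Process(target=bump, args=(channel,))
    p.start()
    channel.get(timeout=30)  # child's own copy (its value depends on the start method)
    p.join()
    after_process = counter  # still 1: the child incremented a separate copy
    return after_thread, after_process


if __name__ == "__main__":
    print(demo())  # (1, 1)
```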

Performance Impact

Multiprogramming improves CPU utilization by reducing idle time during I/O operations.

Multitasking improves perceived performance through responsive applications.

Multithreading improves actual performance by utilizing multiple cores for single applications.

Multiprocessing improves overall system throughput through genuine parallel execution.

When Should You Use Each Technique?

Understanding when to apply each concept separates good developers from great ones.

Choose Multiprogramming When

- Running batch processing jobs

- Maximizing server resource utilization

- Managing multiple independent background services

Choose Multitasking When

- Building interactive applications

- Developing operating systems

- Creating responsive user interfaces

Choose Multithreading When

- Processing large datasets

- Building web servers handling multiple connections

- Developing applications that benefit from parallel execution

- Creating responsive GUI applications

Choose Multiprocessing When

- Running computationally intensive tasks

- Building distributed systems

- The workload requires fault tolerance

- Applications need isolation between execution units

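In CPython, the Global Interpreter Lock (GIL) allows only one thread to execute Python bytecode at a time, so the Python threading documentation recommends multiprocessing or concurrent.futures.ProcessPoolExecutor for CPU-bound work that needs multiple cores.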
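In practice, following that advice often means swapping ThreadPoolExecutor for ProcessPoolExecutor; both live in the standard library’s concurrent.futures module and share the same interface. The prime-counting function is just an illustrative CPU-bound workload, and any speedup depends on your core count and on the cost of sending arguments and results between processes. The GIL behavior described here applies to the standard CPython build; the experimental free-threaded build introduced in Python 3.13 behaves differently.

```python
import math
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit):
    """Illustrative CPU-bound task: count primes below `limit` by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, math.isqrt(n) + 1)):
            count += 1
    return count


def run(executor_cls, limits):
    """Run count_primes over `limits` with the given executor class."""
    with executor_cls() as pool:
        return list(pool.map(count_primes, limits))


if __name__ == "__main__":
    limits = [20_000] * 4
    # Threads: correct answers, but in a standard CPython build only one
    # thread executes Python bytecode at a time, so expect little speedup.
    print(run(ThreadPoolExecutor, limits))
    # Processes: each worker is a separate interpreter with its own GIL,
    # so the four tasks can run on different cores simultaneously.
    print(run(ProcessPoolExecutor, limits))
```

Wrapping each run call with time.perf_counter() is an easy way to compare the two executors on your own machine.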

Real-World Applications and Examples

Theory is great, but let’s see these concepts in action.

Web Servers

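Apache and Nginx take different approaches. Apache traditionally used a process-based model (the prefork MPM runs a pool of processes, each handling one connection at a time), and its worker and event MPMs combine multiple processes with multiple threads. Nginx uses an event-driven design: a small number of worker processes, usually one per core, each handle many connections with non-blocking I/O instead of dedicating a thread to every connection.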

Modern web servers often combine these ideas: threads or event loops to juggle many connections, and multiple processes to use every core.
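The event-driven model is easier to picture with a small example. This sketch uses Python’s asyncio as an analogy: one thread runs an event loop, and each await hands control back so other connections can make progress. Nginx itself is written in C on top of OS mechanisms such as epoll and kqueue; the handle, client, and main functions here are hypothetical and only mirror the idea.

```python
import asyncio


async def handle(reader, writer):
    """Serve one connection: read a line, send it back upper-cased."""
    line = await reader.readline()  # while waiting, the loop serves other clients
    writer.write(line.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def client(port, text):
    """Hypothetical client: send one line, return the server's reply."""
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(text.encode() + b"\n")
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply.decode().strip()


async def main():
    # Port 0 asks the OS for any free port.
    server = await asyncio.start_server(handle, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        # Five connections in flight at once, all served by one thread.
        return await asyncio.gather(*(client(port, f"req {i}") for i in range(5)))


if __name__ == "__main__":
    print(asyncio.run(main()))  # ['REQ 0', 'REQ 1', 'REQ 2', 'REQ 3', 'REQ 4']
```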

Databases

Database management systems use these techniques in different ways. MySQL typically gives each client connection its own thread, while PostgreSQL starts a separate server process for each connection.

For heavy workloads, databases leverage multiprocessing through parallel query execution across multiple cores.

Video Rendering

Software like Adobe Premiere and DaVinci Resolve utilizes both multithreading and multiprocessing. Different frames render simultaneously across multiple threads and processors.

Operating Systems

Modern operating systems like Linux, Windows, and macOS use all four concepts:

- Multiprogramming keeps the CPU busy

- Multitasking enables user interaction

- Multithreading improves application responsiveness

- Multiprocessing utilizes available hardware

Common Misconceptions Cleared

Let’s address some confusion that plagues even experienced professionals.

Myth 1: Multitasking Means True Parallel Execution

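False. On a single-core processor, multitasking rapidly switches between tasks, creating an illusion of parallelism through fast context switching. On multi-core machines tasks can genuinely run at the same time, but that parallelism comes from the extra cores (multiprocessing), not from multitasking itself.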

Myth 2: More Threads Always Mean Better Performance

False. Threads have overhead. Creating too many threads can actually slow down applications. The optimal number depends on the task type and available cores.
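A common starting point for sizing a thread pool is the rule of thumb popularized by Brian Goetz’s Java Concurrency in Practice, assuming you want full CPU utilization: threads ≈ cores × (1 + wait time / compute time). The Python sketch below encodes it; pool_size is a hypothetical helper, and the result is a first guess to refine by measuring, not a guaranteed optimum.

```python
import os


def pool_size(wait_time, compute_time, cores=None):
    """Rule-of-thumb thread count: cores * (1 + wait_time / compute_time).

    wait_time and compute_time are the average time a task spends blocked
    (for example on I/O) versus using the CPU, in any consistent unit.
    """
    # os.cpu_count() reports logical CPUs and may return None.
    cores = cores or os.cpu_count() or 1
    return max(1, round(cores * (1 + wait_time / compute_time)))


print(pool_size(0, 10, cores=8))   # 8: CPU-bound, roughly one thread per core
print(pool_size(90, 10, cores=8))  # 80: tasks spend most of their time waiting
```

Going well beyond these numbers tends to add context switching and thread-stack memory without adding throughput.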

Myth 3: Multiprocessing and Multithreading Are the Same

False. Multiprocessing involves multiple processors. Multithreading involves multiple execution paths within a process. They’re complementary but distinct.

Myth 4: Multiprogramming Is Obsolete

False. Modern systems still use multiprogramming principles. Server workloads particularly benefit from multiprogramming concepts.

The Future of Parallel Computing

The computing landscape continues evolving rapidly.

Heterogeneous Computing

Modern systems combine different processor types. CPUs work alongside GPUs, TPUs, and specialized accelerators. This requires sophisticated scheduling across diverse hardware.

Cloud Computing

Distributed systems across multiple machines extend multiprocessing concepts. Containerization and microservices architecture build upon these fundamental principles.

Quantum Computing

Quantum computers operate on entirely different principles. However, classical computing concepts will remain relevant for decades as hybrid systems emerge.

According to research published in ACM Computing Surveys, understanding these classical concepts remains essential even as computing paradigms shift.

Frequently Asked Questions

What is the main difference between multitasking and multiprogramming?

Multitasking focuses on user responsiveness through time-slicing, while multiprogramming focuses on CPU utilization by switching during I/O waits.

Can multithreading work on single-core processors?

Yes. On a single core, threads interleave rather than run in parallel, but that still helps when threads spend time waiting on I/O or user input: one thread can make progress while another is blocked. True parallelism requires multiple cores.

Why is multiprocessing sometimes better than multithreading?

Multiprocessing provides better isolation between execution units: if one process crashes, the others keep running. Runtimes with a global interpreter lock, such as CPython, also get more out of multiprocessing than multithreading for CPU-bound tasks.

Do all applications benefit from multithreading?

No. Applications with sequential dependencies or minimal CPU usage don’t benefit significantly. The overhead of thread management can actually reduce performance in such cases.

How do modern CPUs support these concepts?

Modern CPUs include multiple cores (supporting multiprocessing) and simultaneous multithreading, which Intel markets as Hyper-Threading, typically letting each core run two hardware threads (supporting efficient multithreading). Operating systems use these hardware features to implement multitasking and multiprogramming.

Conclusion

Understanding multiprogramming, multitasking, multithreading, and multiprocessing isn’t just an academic exercise. These concepts form the backbone of modern computing.

Multiprogramming maximizes CPU utilization. Multitasking enhances user experience. Multithreading improves application performance. Multiprocessing enables true parallel execution.

Each technique serves specific purposes. Smart developers and system architects choose the right approach based on requirements, hardware capabilities, and application constraints.

The next time your computer runs multiple applications smoothly, you’ll understand the sophisticated dance happening behind the scenes. These concepts work together, creating the seamless computing experience we often take for granted.

Keep exploring, keep learning, and remember: understanding fundamentals always pays dividends in technology careers.

Sources Referenced:

- Silberschatz, A., Galvin, P. B., & Gagne, G. “Operating System Concepts”

- Oracle Java Documentation – Concurrency Tutorial

- Microsoft Windows Documentation – Process and Thread Functions

- Intel Developer Documentation

- Python Official Documentation – multiprocessing module

- IBM Mainframe Computing Documentation

- ACM Computing Surveys